At the start of the summer, Angel Business Communications, working closely with the Data Centre Alliance, organised the latest in a series of Data Centre Transformation events, held at the University of Manchester (a full report can be found later on in the digital magazine). What I found most interesting, and even inspirational, was that the topics up for discussion varied somewhat from those at your ‘average’ data centre event. Yes, there’s no escaping the fact that good old data centre facilities need to be tried, tested and very reliable, so any new ideas and technologies need to have their tyres kicked a good few times before being accepted. However, as in the wider world of IT, it does seem that, when it comes to the data centre, more and more end users are becoming aware that, whatever their thoughts on the future, they cannot afford to ignore the ideas and technologies that plenty of their peers are adopting.

Yes, if you have a greenfield site, or just a greenfield company, then you have no legacy baggage, and making decisions around data centres and IT is relatively easy. For the many companies with plenty of existing infrastructure, the path forward might be less clear, but there’s no doubt that, if you don’t start looking at the new ideas and technologies very soon, you could well be bypassed by your competition, whether that’s the start-ups or just your regular competitors who are moving that little bit quicker than you.

Ultimately, it’s all about customer experience and reliability. I remember reading, years ago, the words of the MD of one of the embryonic budget airlines, who basically said that if customers were paying so little for their flights, did it really matter if their luggage didn’t make the journey with them?(!) Now that same budget airline, which unsurprisingly had a poor reputation, has set about addressing it, thanks in large part to technology plus a different corporate mindset, and one of its main rivals is now perceived to have the customer service problem.

So, park the data centre and IT for a minute, put yourself in your customers’ shoes, and work out what they can reasonably expect, in terms of price, performance and after-sales service. Then work out how you need to develop your data centre and IT real estate to deliver on this ideal. I’m fairly sure that you won’t be able to keep your customers as happy as you want them to be without a major re-evaluation of the technology that you are using right now, with a view to how this needs to change.

And if edge, IoT and Artificial Intelligence leave you cold, perhaps it’s time to retire?!


Robert Half’s new report, Digital transformation and the future of hiring, has found that digital processes will be extended to manual data entry tasks such as financial modelling (41%), generating financial reports (40%), and project management and reporting (38%) within the next three years. As a result, payroll (37%), financial planning (33%), accounts payable (38%) and accounts receivable (32%) are expected to be the roles impacted by automation by 2022.

Digitalisation has already emerged as a business priority and is set to impact the future of business by offering new technologies to address threats and opportunities for a competitive advantage. Overall, 87% of executives have recognised the positive impact that the growing reliance on technology holds for organisations.

“Digitalisation will offer a new approach where labour and time-intensive processes can be shifted to allow for more value-added work to take place,” explained Matt Weston, Director at Robert Half UK. “Automation is impacting traditional business functions in a big way. Finance is no exception and professionals will need to be prepared to hold a more prominent and integrated influence on the wider business, gaining new skills that will see them through the technological shift.”

The main benefits that businesses are expecting, or already achieving, from digital transformation include improved efficiency and productivity, better decision-making, and employees taking on more value-added work, leading to more fulfilling careers in the long term. Overall, finance executives believe digitalisation will increase the productivity of each individual (59%), enable employees to focus less on data entry and more on the execution of tasks (53%), and provide opportunities to learn new capabilities (51%).

“While a technical understanding will remain the core competence that provides professional credibility, it will need to be enhanced with soft skills,” added Peter Simons, head of future of finance, CIMA (Chartered Institute of Management Accountants). “We are already seeing this move occur within the finance department with the shift from technical to commercial skills. In the future, financial insights won’t just come from financial analysis but collaborating with other areas of the business. Traditionally labelled ‘professional services’ executives will need to engage with people, ask questions, have empathy and communicate in a compelling way to make informed business decisions.”

Research commissioned by M-Files Corporation, the intelligent information management company, has revealed that poor information management practices are preventing UK businesses from realising the true potential of mobile and remote working.

The research, which was conducted by Vanson Bourne and polled 250 UK-based IT decision-makers, found that nearly nine out of every ten (89 per cent) respondents said their staff find it challenging at least some of the time to locate documents when working outside of the office or from mobile devices. Other key findings included:

Half (51 per cent) of respondents would like to be able to access company documents and files, and/or have the ability to edit them, when working remotely/on a mobile device; 44 per cent want the ability to approve documents with digital signatures; 40 per cent are not able to share or collaborate on documents remotely.

Commenting on the findings, Julian Cook, VP of UK Business at M-Files, said: “Remote and mobile working practices are proliferating throughout organisations, and are increasingly viewed as a must-have not only by Millennials entering the workforce, but also by more established members of staff. Effectively empowering your mobile workforce can make a big difference to productivity and efficiency, but only if the tools provided have the robust information management functionality required by organisations with the ease and simplicity employees demand. It’s clear from our research that this is something that most organisations aren’t currently able to offer their employees. Many remote workers still struggle to find the information they need and because of this must find workarounds, such as unauthorised file-sharing apps just to keep the wheels moving.

“The use of unsanctioned personal file-sharing apps at work by employees increases the risk of data breaches, reduces the IT department’s visibility and can raise compliance issues. Organisations intent on pursuing mobile and remote working initiatives therefore need to focus on providing the right tools to make these activities straightforward for everyone if they are to be successful,” Cook continued.

UK business leaders identify far fewer risks affecting their businesses than their counterparts in Germany and France, according to research from the Gowling WLG Digital Risk Calculator, which launches today. This new free tool allows small and medium-sized businesses to better understand their digital risks and compare them with those of other businesses and industries.

Research informing the Gowling WLG Digital Risk Calculator was gathered from 999 large SMEs in the UK, France and Germany. The findings revealed an overly optimistic picture among UK business leaders, with UK respondents identifying far fewer digital risks as a threat to their business than their European counterparts. For each risk area analysed, UK respondents consistently identified it as a threat between 2 and 25% less often than non-UK respondents.

Commenting on the research, Helen Davenport, director at Gowling WLG, said: “Recent wide-ranging external cyber-attacks such as the WannaCry and Petya hacks reinforce the real and immediate threat of cyber-crime to all organisations and businesses.

“However, there tends to be an ‘it won’t happen to me’ attitude among business leaders, who on the one hand anticipate that external cyber-attacks will increase over the next three years, but on the other fail to identify such areas of risk as a concern for them. This is likely preventing them from preparing suitably for the digital threats they may face.”

Respondents revealed that external cyber risks (69%) are thought to be the most concerning category of digital threat for businesses across all countries surveyed. This risk is anticipated to grow even further, with 51% of respondents believing that it will increase within the next three years.

Other digital risks of concern to participants include customer security (57%), identity theft / cloning (47%) and rogue employees (42%). More than a third of respondents (40%) also believe that the lack of sufficient technical and business knowledge amongst employees is a risk to their business.

Additionally, one third (32%) of UK businesses feel that digital risks related to regulatory issues have increased during the past three years. However, less than a third (29%) believe that regulatory issues are a risk to their business.

Risks related to highly sensitive/valuable data are the second most prominent risk to businesses (55%), according to respondents. However, when asked about the GDPR, which represents the most significant change to data protection legislation in the last 20 years, only one seventh (14%) of UK businesses were aware of the fines they may face for failing to protect their data. In comparison, 26% of respondents from Germany and 45% from France were aware of the maximum fine, placing UK business leaders at the back of the pack when it comes to understanding the risks posed by failure to comply with the GDPR.
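For readers unsure what “the maximum fine” refers to: under Article 83 of the GDPR, administrative fines are capped in two tiers, and for an undertaking each cap is whichever is higher of a fixed amount or a share of total worldwide annual turnover for the preceding financial year (€10 million or 2% for the lower tier; €20 million or 4% for the upper tier, which is the headline figure usually quoted). The short sketch below simply computes those ceilings. The function name and example turnovers are illustrative assumptions, and the result is an upper bound, not a prediction of what a regulator would actually impose.

```python
# Caps from GDPR Article 83: (fixed amount in EUR, share of worldwide annual turnover).
FINE_TIERS = {
    "lower": (10_000_000, 0.02),  # Article 83(4)
    "upper": (20_000_000, 0.04),  # Article 83(5)
}


def max_gdpr_fine(annual_turnover_eur: float, tier: str = "upper") -> float:
    """Ceiling for an undertaking: the higher of the fixed cap or the turnover share."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    fixed_cap, share = FINE_TIERS[tier]
    return max(fixed_cap, share * annual_turnover_eur)


# Hypothetical turnovers of EUR 50m and EUR 1bn.
for turnover in (50_000_000, 1_000_000_000):
    print(f"turnover EUR {turnover:,}: upper-tier ceiling EUR {max_gdpr_fine(turnover):,.0f}")
```

For a business with €50m turnover the fixed €20m cap dominates; only above €500m turnover does the 4% figure take over.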

Despite the identification of data risks, only 52% of UK businesses do regular data back-ups, compared with 66% in Germany and 67% in France. Moreover, only 32% of UK businesses and 39% of businesses in Germany are open to using off-site storage for sensitive data today, compared with 50% of French businesses.

Given the changing nature of the digital world, the majority of business leaders (70%) involve IT support in their digital risk management. By comparison, the proportion that involve legal support drops significantly, to an average of just 31% across the surveyed nations (46% in the UK, 23% in Germany and 23% in France).

When asked about how prepared they feel for their digital risks, only 16% of all respondents stated that they are fully prepared.

Patrick Arben, partner at Gowling WLG, comments: “When affected by a cyber-attack or any other digital threat, the immediate focus is to work with IT professionals to understand what has happened. However, it is always worth taking internal or external legal advice before commencing an investigation and as circumstances change.

“The essence for all business leaders is to stop ignoring the digital risks their companies face. By doing this, they can easily and proactively work to prevent future attacks from happening.”

According to recent research conducted by the Cloud Industry Forum (CIF), UK businesses looking to embrace digital transformation consider the cloud to be a crucial cog in this process. However, the evidence also demonstrates that there is room for the cloud to evolve further, in order to address lingering cloud migration challenges and the desire to keep some resources on-premises. According to HyperGrid, this is where Enterprise Cloud-as-a-Service (ECaaS) can make a difference.

The CIF research, which polled 250 IT and business decision-makers across a range of UK organisations earlier this year, found that 92 per cent of companies polled consider the cloud to be quite important, very important or critical to their digital transformation strategy, which highlights the appetite for remotely based cloud. However, the survey also revealed that 63 per cent of IT decision-makers that use cloud-based services embrace a hybrid approach, with 43 per cent saying they intend to keep at least some business-critical apps or services on-premises.

Doug Rich, VP of EMEA at HyperGrid, believes that these trends point to the need for an approach which blends the benefits of cloud-based hosting with on-premises infrastructure in a way that goes beyond historic offerings.

Rich said: “CIF’s research has underlined just how crucial cloud is in enabling digital transformation, and there is a tangible desire amongst key decision-makers to embrace it on a more wholesale basis. Its core benefits, including the flexibility of consumption-based pricing and the agility it brings by freeing up IT departments to focus on innovation, are already well-documented. Despite this trend, there remains a steadfast need for companies to keep some of their applications and data a little closer to home. Hybrid IT environments have gone some way towards addressing these concerns, but shortcomings in the way we approach cloud and on-premises infrastructure remain. What businesses need is a service that effectively combines the best of both worlds in a way that has not been achieved before.”

To illustrate this point, the CIF research also found that 52 per cent of IT decision-makers found complexity of migration a difficulty when moving to a cloud solution. Alongside this, 48 per cent said privacy or security concerns are a barrier to digital transformation, with 50 per cent citing investments in legacy systems as a hurdle. These figures demonstrate how organisations are frequently obliged to keep at least some of their infrastructure on-premises, and how the available cloud solutions make it difficult for them to strike this balance.

Rich added: “It’s a near-impossible task to persuade businesses to migrate all of their data and applications to a remotely cloud-based solution. With this in mind, ECaaS is set to play a key role in defining how companies embrace cloud in the future. This solution goes a step further than current hybrid cloud arrangements, by enabling applications and resources stored in both public and private clouds to be easily managed from one central location. Crucially, ECaaS allows for the installation of public cloud on-premises, enabling businesses to benefit from a consumption-based usage model and third-party management of the infrastructure, while also gaining the security and peace of mind offered by keeping infrastructure on-premises.”

Doug Rich concluded: “Embracing digital transformation is vital if organisations want to maintain competitive advantage. Rather than spending valuable IT time and resources on figuring out how to reconcile cloud adoption with the retention of legacy infrastructure, decision-makers should look at how the cloud is evolving to bridge this gap whilst driving innovation.”

According to new research from thermal risk experts EkkoSense, almost eight out of ten UK data centres are currently non-compliant with recent ASHRAE Thermal Guidelines for Data Processing Environments.

EkkoSense recently analysed some 128 UK data centre halls and over 16,500 IT equipment racks – the industry’s largest and most accurate survey into data centre cooling - to reveal that 78% of UK data centres currently aren’t compliant with current practice ASHRAE thermal guidelines.

The ASHRAE standard – published in the organisation’s ‘Thermal Guidelines for Data Processing Environments – 4th Edition’ – is highly regarded as a best practice thermal guide for data centre operators, offering clear recommendations for effective data centre temperature testing. ASHRAE suggests that simply positioning temperature sensors on data centre columns and walls is no longer enough, and that data centre operators should – as a minimum – be collecting temperature data from at least one point every 3m to 9m of rack aisle. ASHRAE also suggests that unless components have their own dedicated thermal sensors, there’s realistically no way to stay within target thermal limits.
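As a rough illustration of what the 3m to 9m spacing described above implies, the sketch below counts the minimum number of measurement points along one aisle. It assumes (our simplification, not ASHRAE wording) that each point covers one contiguous stretch of aisle no longer than the chosen spacing; the function name and the 20m aisle are hypothetical.

```python
import math

MIN_SPACING_M = 3.0  # tightest spacing in the 3m to 9m band quoted above
MAX_SPACING_M = 9.0  # widest spacing


def sensor_points(aisle_length_m: float, spacing_m: float) -> int:
    """Minimum measurement points so no stretch of aisle exceeds `spacing_m`."""
    if aisle_length_m <= 0:
        raise ValueError("aisle length must be positive")
    if not MIN_SPACING_M <= spacing_m <= MAX_SPACING_M:
        raise ValueError("spacing outside the 3m to 9m band")
    return math.ceil(aisle_length_m / spacing_m)


# Hypothetical 20m aisle: fewest points at the widest spacing, most at the tightest.
for spacing in (MAX_SPACING_M, MIN_SPACING_M):
    print(f"{spacing:.0f}m spacing -> {sensor_points(20.0, spacing)} points")
```

For a 20m aisle this gives 3 points at the widest spacing and 7 at the tightest, per aisle, before any per-component sensors are counted.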

“ASHRAE’s recommendations speak directly to the risks that data centre operators face from non-compliance, and almost all operators use this as their stated standard. Our own research reveals that 11% of IT racks in the 128 data centre halls we surveyed were actually outside ASHRAE’s recommended rack inlet temperature range of 18-27°C, even though this range was the agreed performance window that clients were working towards. We also found that 78% of data centres had at least one server rack that lay outside that range, effectively taking their data centre outside of thermal compliance,” explained James Kirkwood, EkkoSense’s Head of Critical Services.

“Unfortunately the problem for the majority of data centre operators that only monitor general data centre room/aisle temperatures is that average measurements don’t identify hot and cold spots. Without a more precise thermal monitoring strategy and the technologies to support it, organisations will always remain at risk – and ASHRAE non-compliant – from individual racks that lie outside the recommended range. That’s why the introduction of the latest generation of Internet of Things-enabled temperature sensors – introduced since the initial publication of ASHRAE’s report – is likely to prove instrumental in helping organisations to cost-effectively resolve their non-compliance issues,” continued James.
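The point about averages can be shown with a small, hypothetical hall. The sketch below checks each rack’s inlet temperature against the 18-27°C recommended range quoted above and reports both the hall average and the share of racks outside the range, applying the rule described in the research: a hall counts as non-compliant if even one rack is out of range. Rack names and readings are invented for illustration.

```python
from statistics import mean
from typing import Mapping

RECOMMENDED_MIN_C = 18.0  # lower bound of the recommended inlet range quoted above
RECOMMENDED_MAX_C = 27.0  # upper bound


def in_range(temp_c: float) -> bool:
    return RECOMMENDED_MIN_C <= temp_c <= RECOMMENDED_MAX_C


def hall_report(inlet_temps_c: Mapping[str, float]) -> dict:
    """Summarise one hall: average inlet temperature versus per-rack compliance."""
    if not inlet_temps_c:
        raise ValueError("no rack readings supplied")
    avg = mean(inlet_temps_c.values())
    outliers = sorted(rack for rack, t in inlet_temps_c.items() if not in_range(t))
    return {
        "average_c": avg,
        "average_in_range": in_range(avg),
        "racks_out_of_range": outliers,
        "share_out_of_range": len(outliers) / len(inlet_temps_c),
        # One out-of-range rack is enough to make the whole hall non-compliant.
        "hall_compliant": not outliers,
    }


# Hypothetical inlet readings (°C) for a five-rack aisle.
readings = {"A01": 21.5, "A02": 22.0, "A03": 29.0, "A04": 17.5, "A05": 23.0}
report = hall_report(readings)
print(report)
```

With these readings the hall average sits comfortably at about 22.6°C, yet one rack is too hot and another too cold, so two of the five racks are outside the range and the hall as a whole is non-compliant.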

This latest EkkoSense research follows on from recent findings that suggested the current average cooling utilisation level for UK data centres is just 34%. According to James Kirkwood: “Our research shows that less than 5% of data centres are actively monitoring and reporting individual rack temperatures and their compliance. The result is that they have no way of knowing if they are actually truly compliant, and that’s a major concern when it comes to data centre risk management.”

Given that UK data centre operators continue to invest significantly in expensive cooling equipment, EkkoSense suggests that the cause of ASHRAE non-compliance is not limited cooling capacity but rather the poor management of airflow and cooling strategies. EkkoSense directly addresses this issue by combining innovative software and sensors to help data centres gain a true real-time perspective through the modelling, visualisation and monitoring of thermal performance. Using the latest 3D visualisation techniques and real-time inputs from Internet of Things (IoT) sensors, EkkoSense is able, for the first time, to provide data centre operators with an intuitive, real-time 3D view of their data centre environment’s physical and thermal dynamics.

Research reveals the secrets of essential DC Infrastructure.

Tony Lock, Director of Engagement and Distinguished Analyst, Freeform Dynamics Ltd, August 2017

There are very few organisations now where the demands and expectations placed on IT services don’t increase daily. This creates great pressure on the datacentre, and not just on the server and storage estates: a recent report (http://www.freeformdynamics.com/fullarticle.asp?aid=1955) by Freeform Dynamics clearly shows similar demands impacting the core power, cooling and support infrastructure. So how well are datacentre managers dealing with these pressures?

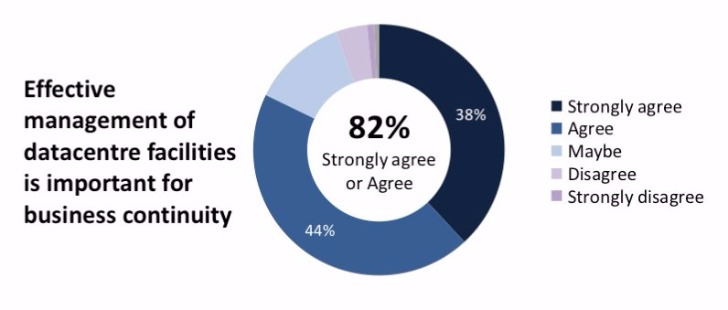

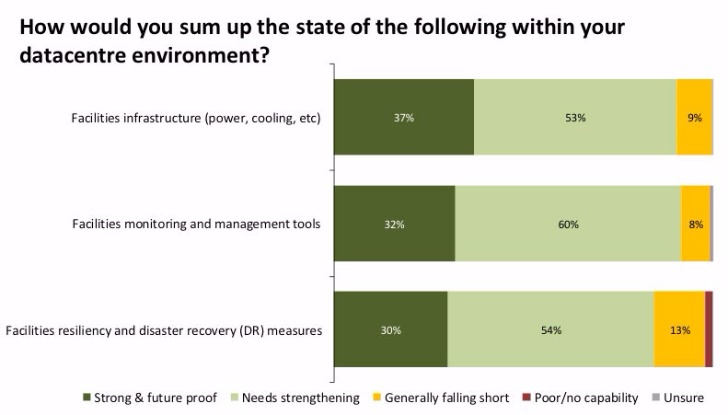

Unsurprisingly, our report indicates very clearly that a significant majority of respondents acknowledge that the effective management of datacentre facilities is important, if not vital, to ensure business continuity (Figure 1).

The research indicates that business operations now depend on IT to such a degree that many organisations recognise that they absolutely must improve the availability of such systems. Virtualisation, “cloud solutions” and significant improvements to workload management have had a positive impact, but they all fundamentally rely on the underlying power and facilities systems: unless these are ready to support continuous operations without service interruption, all that hard work at the IT level could be rendered redundant.

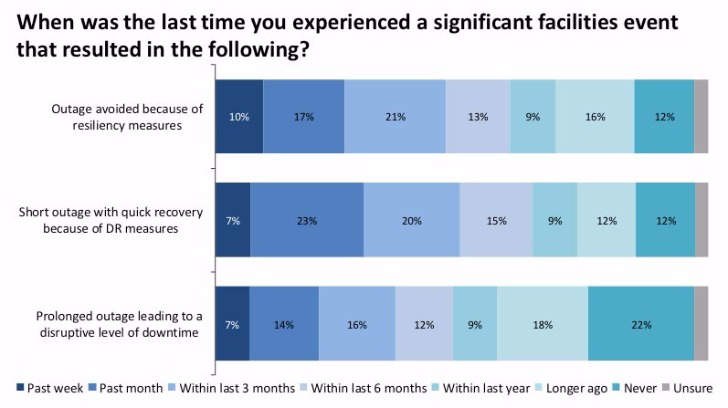

Which makes it all the more surprising that many organisations still experience IT service interruptions because of problems in the facilities infrastructure that supports datacentre operations. Indeed, around a third of respondents said that they have experienced “disruptive” levels of downtime because of facilities-related events within the past three months. (Figure 2.)

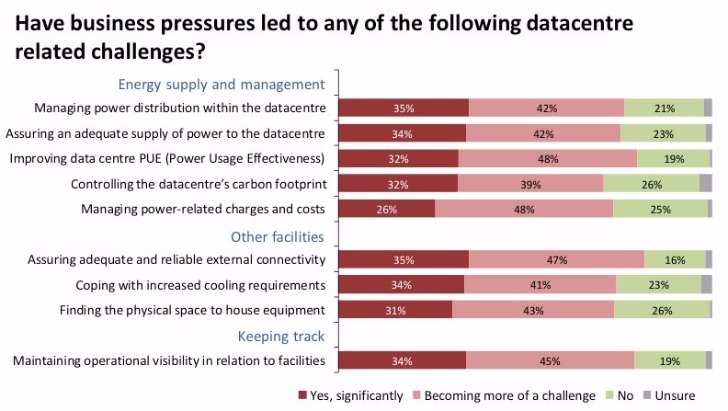

The fact that outages still take place regularly, despite investments in IT continuing apace, is a problem for businesses of all types and sizes, but more particularly for the datacentre managers who must keep things running smoothly. And this challenge is becoming ever more critical and visible, as business demands and expectations continue to ramp up the pressure on many components in the power and cooling infrastructure (Figure 3).

The vast majority of respondents indicated that they see considerable and growing challenges in everything from the supply of energy to the datacentre, through handling expanding cooling requirements, to managing power distribution within the DC. These challenges often go hand in hand with the pressure to manage and reduce datacentre-related costs and charges.

Elements that in previous years may have fallen into the “nice if you could do something about it” category, such as reducing the carbon footprint and improving datacentre PUE, are now a significant challenge for around a third of DC managers, and a growing concern for many others. It is apparent that, after many years of green drivers being largely theoretical, accounting is now making them real.
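PUE (Power Usage Effectiveness), as defined by The Green Grid, is total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean every kilowatt goes to IT. A minimal sketch, using hypothetical readings and instantaneous power rather than the annualised energy figures normally reported, shows how the ratio translates into the share of power spent on cooling, power distribution losses and other overheads.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be below IT equipment power")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility power not consumed by IT equipment."""
    return 1.0 - 1.0 / pue_value


# Hypothetical hall: 1,500 kW drawn at the meter, 1,000 kW delivered to IT.
p = pue(1500.0, 1000.0)
print(f"PUE = {p:.2f}, overhead = {overhead_share(p):.0%}")
```

Here a third of the facility’s power goes on overheads; cutting PUE from 1.5 to 1.3 for the same 1,000 kW IT load would remove 200 kW of overhead.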

As the pressure on core datacentre facilities increases, the survey results indicate that a clear majority believe that, at the very least, power and cooling facilities need to be strengthened. Even greater numbers accept that they should improve the monitoring and management tools used in the running of these systems. (Figure 4.)

While many organisations acknowledge that they need to strengthen their datacentre infrastructure, the research also indicates that considerable numbers need to better understand the options now available to them, especially in terms of power management. However, it can easily be argued that even those organisations that feel they are in a stronger position here will still need to invest, as power and infrastructure management technologies continue to evolve.

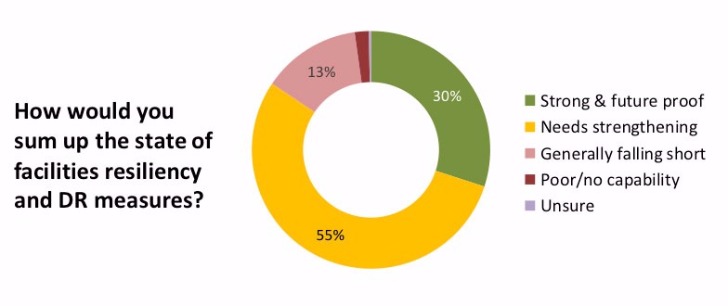

This is summed up very clearly when we look at the state of existing facilities resilience and recovery systems, where the report shows that around one in seven are generally falling short or have no DR capabilities whatsoever. (Figure 5)

These numbers are reflected in the survey when looking at levels of confidence in a range of existing capabilities. Over 60 percent of those surveyed reported they have at best only ‘partial’ confidence that power management systems are well designed, that they have access to the skills and expertise they need or that they can recover quickly in the event of power-related incidents and events. But perhaps the most worrying response, although by no means unexpected, is that only a third of those surveyed are fully confident they have adequately tested their ability to deal with power related failures.

No one doubts that most businesses depend on their IT systems, and that these in turn rely on the underlying datacentre power, cooling and other support facilities. Yet the evidence is that this critical foundation does not receive the attention and investment needed to ensure it can support an expanding range of business services. As in all areas of IT, and in business more generally, getting hold of people with the skills and experience needed to ensure things operate smoothly is a challenge for many. Perhaps only one task is more daunting: finding the time to test recovery processes and make sure they work. It’s a common challenge, but one that has the potential to deliver huge value, so getting business buy-in to adequate testing of recovery capabilities is essential. To get hold of the report, please visit http://www.freeformdynamics.com/fullarticle.asp?aid=1955.

Angel Business Communications is pleased to announce the categories for the SVC Awards 2017, celebrating excellence in Storage, Cloud and Digitalisation. The 30 categories offer a wide range of options for organisations involved in the IT industry to participate. Nomination is free of charge and must be made online at www.svcawards.com.

Infrastructure

Cloud

By Steve Hone, DCA CEO and Cofounder of the Data Centre Trade Association

The summer edition of the DCA journal focuses on Research and Development, and I’m pleased to say we have received some great articles this month. Research leads to increased knowledge and the ability to develop and innovate; the benefits of this investment were plain to see in July in Manchester at the DCA annual conference.

The DCA’s update seminar on 10th July was not only an opportunity to bring DCA members up to speed with the work undertaken to date, but also to share its plans for the future in its continued support of members and the data centre sector.

The seminar also provided an opportunity for members to gain updates from some of the DCA’s strategic partners, including Simon Allen, who spoke about the new DCiRN (Data Centre Incident Reporting Network), and Emma Fryer, who provided an update on the valuable work Tech UK does in support of the data centre sector. This was followed by networking drinks in the evening.

On 11th July the DCA hosted its 7th Annual Conference, which took place at Manchester University. Data Centre Transformation Conference 2017, organised in association with DCS and Angel Business Communications, was a huge success and continues to go from strength to strength.

The quality of content and the healthy debate in all sessions were testament to just how well run the workshops were, so I would like to say a big thank you to all the chairs, workshop sponsors and the committee, who worked so hard to ensure the sessions were interactive, lively and educational.

The workshop topics covered subject matter from across the entire DC sector; however, research and development continued to feature strongly in many of the sessions, which is not surprising given the speed of change we are having to contend with as the demand for digital services continues to grow.

Having seen the feedback sheets from all the attending delegates, it was clear that a huge amount was gained from the day, not just in terms of contacts and knowledge but also in the insight gained from speaking to and listening to others who share the same issues and business challenges. One delegate said it was “refreshing to come to an event where he felt comfortable enough to speak out and learn on his own terms, without feeling he was being sold to”. High praise indeed, so thank you to all the delegates who attended and helped make the day such a success.

We closed the conference with a sit-down dinner in the evening with good food & wine served by the university students and of course great company which for some meant we were still out to watch the sun come up!

Although some will be taking the opportunity to slip away to recharge their batteries, you still have time to submit articles for the DCM buyers guide; the theme is “Resilience and Availability” and the copy deadline is 20th August.

There is also still space for copy in the next edition of the DCA journal, with a theme of Smart Cities, IoT and Cloud, which always seems to be a popular subject; the copy deadline for this is 12th September. Please forward all articles to Amanda McFarlane (amandam@datacentrealliance.org) and please call if you have any questions.

By Dr Jon Summers, University of Leeds, July 2017

For the last six years at the University of Leeds a group of researchers in the Schools of Mechanical Engineering and Computing have been trying to deal with the extremely complex question of how to manage simple thermodynamics and fluid flows derived from very complex digital workloads.

It is a question that now needs to be addressed, and this can only be achieved using real, live Datacom equipment: servers, switches and storage. Data centres should really be considered as integrated, holistic systems whose prime function is to facilitate uninterrupted digital services. Would you go out and purchase a car, with all of its aerodynamics, road handling, crashworthiness and engine management technology, but without an ENGINE, leaving you as the driver to specify and source its size, shape, capacity and performance? No, you would not: cars are integrated systems. The same is true of data centres: the engine of the car is equivalent to the datacom, which is key to providing the intended function of the system. That is why research and development around providing the facility for the datacom should actually use real, live datacom.

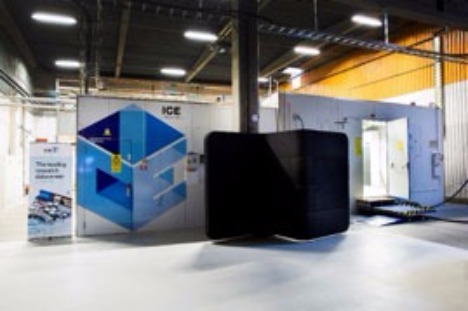

The group at the University of Leeds has in the past received funding from data centres, namely Digiplex and aql, to develop experimental setups that can lead to a better understanding of Datacom operation and performance under differing thermal and fluid flow scenarios. With the involvement of Digiplex, we constructed a large data centre cube with a hot and cold aisle arrangement and have run it with live Datacom and some Hillstone loadbanks; the latter have been shown to act as a 4U proxy that replicates (with one fan and one heater) four 1U pizza box servers operating at full capacity. The data centre cube is shown in Figure 1. To augment this work on the thermal management of real Datacom in a live data centre environment, a generic server wind tunnel was developed with support from the Leeds-based data centre and hosting company aql. The wind tunnel offers finer control over the thermal and airflow aspects of managing Datacom and is shown in Figure 2.

Figure 1: Left shows a front view of the cube with a standard wind tunnel connected to the left of the cube. Right highlights the connection of the wind tunnel exhaust to the inlet to the cold aisle of the data centre cube.

The generic server wind tunnel offers the capability to test Datacom equipment at different inlet temperatures and humidities, although humidity is not easily controlled. The equipment has enabled the team to look at Datacom power requirements when the facility fan pressurises the cold aisle. Both the cube and the wind tunnel have helped the team study the effects of pressure and airflow on the front-to-back temperature difference (delta T) across the Datacom.
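The link between airflow and the front-to-back delta T follows from a simple steady-state heat balance: if all the electrical power P drawn by a piece of Datacom is carried away by the air passing through it, then P = ρ·V̇·c_p·ΔT, so ΔT = P / (ρ·V̇·c_p). The sketch below assumes constant air properties (density about 1.2 kg/m³ and specific heat about 1005 J/(kg·K), typical near room temperature at sea level) and a hypothetical 400 W server. It deliberately ignores fan-speed control, bypass and recirculation, which real equipment, and rigs such as the cube and wind tunnel, will not.

```python
AIR_DENSITY = 1.2  # kg/m^3, assumed constant
AIR_CP = 1005.0    # J/(kg*K), assumed constant


def delta_t_kelvin(power_w: float, airflow_m3_s: float) -> float:
    """Front-to-back temperature rise if all power is rejected to the airstream."""
    if airflow_m3_s <= 0:
        raise ValueError("airflow must be positive")
    mass_flow_kg_s = AIR_DENSITY * airflow_m3_s
    return power_w / (mass_flow_kg_s * AIR_CP)


# Hypothetical 400 W server at two airflow rates.
for flow in (0.03, 0.06):
    print(f"{flow:.2f} m^3/s -> delta T = {delta_t_kelvin(400.0, flow):.1f} K")
```

Under these assumptions, doubling the airflow through the same 400 W server halves the temperature rise, from roughly 11 K to roughly 5.5 K, which is why the airflow the facility drives through each unit matters as much as the inlet temperature.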

Figure 2: Left shows the exit of the generic server wind tunnel. Right shows the full extent of the wind tunnel with the working cross section.

The work at Leeds has come to the attention of SICS North, the nearly two-year-old data centre research and development group that operates as part of the Government Research Institutes in Sweden, under the leadership of Tor Bjorn Minde. We are now forging a strong collaboration between SICS North and the University of Leeds, with an exchange of expertise that includes my taking up two years of study leave at SICS North, where we will continue to grow integrated and holistic data centre research and development using live Datacom.

Figure 3 shows the two new data centre pods with real Datacom, available for a number of research projects around data centre control, operation and performance. SICS ICE module 1, on the left, houses the world’s first open Hadoop-based research data centre, offering the capability to do open big data research. Module 2, on the right, is a flexible lab with 10 racks, much like the cube but with additional functionality.

Figure 3: The two data centre pods at SICS North, Sweden, with SICS ICE on the left housing the open Hadoop-based research data centre.

By combining the expertise at Leeds on thermal and energy management of a myriad of Datacom systems with the data centre operational capabilities at SICS North, we anticipate being able to offer a stronger understanding of how Datacom integrates with data centre facilities, at a time of great need.

Acknowledgements

I would like to acknowledge the contributions of PhD student Morgan Tatchell-Evans and my colleague Professor Nik Kapur to the design and construction of the data centre cube, with kind support from Digiplex, and of PhD student Daniel Burdett to the design and construction of the generic server wind tunnel, with generous support from aql.

Dr. Jon Summers is a senior lecturer in the School of Mechanical Engineering at Leeds. During the last 20 years, he has worked on a number of government- and industry-funded projects that have required different levels of computational modelling. Having built and managed compute clusters to support many research projects since 1998, Jon now chairs the High-Performance Computing User Group at Leeds University and is no stranger to high performance computing, having developed software that uses parallel computation. His modelling skills have led to publications in areas ranging from process engineering and tribology to bioengineering, and even dinosaur extinction. In the last three to four years Jon’s research has focussed on a range of airflow, thermal management and energy efficiency projects within the data centre, HVAC and industrial sectors.

By Mark Fenton, Product Manager at Future Facilities

When one of our developers at Future Facilities HQ, Bo Xia, put on the Oculus Rift headset for the first time, we were unsure how he would react.

To the rest of the team watching from the real world, it was a curious scene: Bo was standing in our office strapped into a VR headset, moving his head and arms around wildly. But Bo had been transported and was now completely immersed in one of our data centre models, walking down the aisles, looking at live power consumption and watching simulated airflows. He was experiencing a fully immersive data centre simulation for the first time. He took off the headset and delivered his verdict with a huge grin: “Amazing!”

Everyone we have delivered the Rift experience to has had this reaction. Often, this has been their first experience of VR and so has come with a healthy level of skepticism and even trepidation towards the technology. What is amazing is watching how quickly that melts away once they are transported to a rooftop chiller plant or back in time to an IBM mainframe facility. Once immersed, there is full freedom to explore the data centre as you please. Walk an aisle, fly through the duct system, watch airflows or engage with any asset of interest. You quickly forget the limitations of being human and fly up to get a bird’s eye view before diving into the internals of a cabinet.

This fully-flexible experience may be the foundation of almost unlimited opportunities for our data centre ecosystem: designers walking clients around their concepts, colocation providers selling a proposed cage layout, upper management touring their investment, facility engineers troubleshooting their own sites and much more. It’s clear that VR will not only change the way we visualise our data centres but more excitingly, it will change the way we work with them as well.

For operational sites, VR will naturally progress to AR (augmented reality), where performance data can be overlaid onto the real world. Imagine walking through your data centre, putting on your AR glasses and superimposing live DCIM data or simulation results directly onto your view. With human error causing the largest percentage of data centre outages, AR could be invaluable in training and assisting site staff to ensure fewer mistakes are made.

When looking at cooling performance, site staff could visualise the airflow around overheating devices to fully understand the thermal environment - and then interactively make improvements. In addition, IT and Facilities could use this technology to proactively visualise their next deployments, a maintenance schedule or even a worst-case failure. VR offers a fully-immersed testbed, where you can experience first-hand the engineering impact of any data centre change you’re planning to make.

So what about when you can’t physically walk around the data hall floor? With the rapid growth of IoT and edge computing, there is a drive towards smaller local facilities that provide low-latency connectivity between users and their cloud requirements. From autonomous cars to the next Pokémon Go, an exponentially increasing volume of data is being produced, along with an unwavering pursuit of faster connectivity to make use of it.

This trend towards larger cloud data centres supported by a distributed network of hundreds, or even thousands, of remote edge sites will be a significant management challenge. It lends itself beautifully to the VR world: VR gives remote operators the tools to assess alarms and find faults, then make adjustments to mitigate the risk of downtime, all from the comfort of their chair. This concept was demonstrated by Vapor IO at the recent Open19 launch, where they showed a Vapor chamber being used in a remote edge location, streaming live data from OpenDCRE and simulated airflow from our own 6SigmaDCX software.

The future of data centres is embarking on an exciting journey towards higher demand, local edge connectivity and a fully connected IoT world. Engineering simulation techniques will ensure these sites can deliver the highest number of applications with the lowest energy spend, all without risking downtime. Combining the power of simulation with VR will allow data centre professionals to engage with and immerse themselves in their remote environments and, for the first time, truly understand the impact of any change they wish to make. VR certainly has the ‘wow’ factor, but it is becoming increasingly clear that the technology will also provide a huge benefit to running and optimising the next generation of data centres.

By Professor Xudong Zhao, Director of Research, School of Engineering and Computer Science, University of Hull

It is widely acknowledged that cooling systems consume 30% to 40% of the energy delivered to Computing & Data Centres (CDCs), while electricity use in CDCs represents 1.3% of the world's total energy consumption. Traditional vapour compression cooling systems for CDCs are neither energy efficient nor environmentally friendly.

Several alternative cooling systems, e.g., adsorption, ejector and evaporative types, have a certain level of energy-saving potential but exhibit inherent problems that have restricted their wider application in CDCs.

One of the most promising directions is the dew point cooling system, which has been widely used in other industrial fields and, if designed properly, potentially has the highest efficiency of any cooling system (an electricity-based COP of 20 to 22).

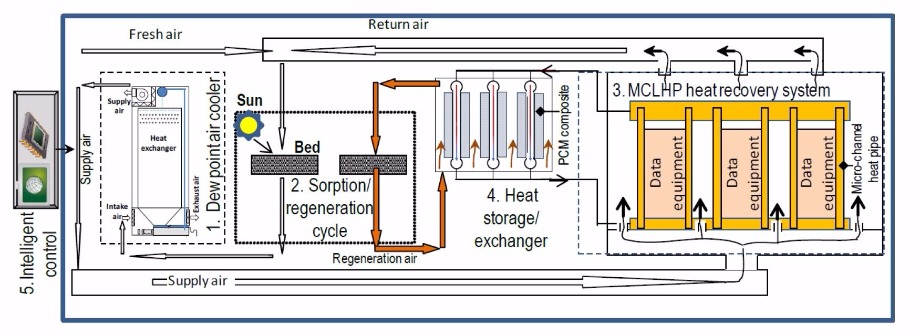

To promote its application in CDCs, an international and inter-sectoral research team, led by the University of Hull and supported by the DCA Data Centre Trade Association, has been formed to work on a joint EU Horizon 2020 research and innovation programme dedicated to developing the design theory, computerised tools and technology prototypes for a novel CDC dew point cooling system. The system includes several critical and highly innovative components (a dew point air cooler, an adsorbent sorption/regeneration cycle, a microchannel loop-heat-pipe (MCLHP) based CDC heat recovery system, a paraffin/expanded-graphite based heat storage/exchanger, and an internet-based intelligent monitoring and control system). It is expected to achieve electrical energy savings of 60% to 90% at an initial price comparable to that of traditional CDC air conditioning systems, thus overcoming the problems that remain with existing CDC cooling systems.

Five major parts of the innovative system, shown in Fig. 1, are being jointly developed by several organisations within the research team:

(1) a unique high-performance dew point air cooler;

(2) an energy-efficient, solar and/or CDC-waste-heat driven adsorbent sorption/desorption cycle containing a sorption bed for air dehumidification and a desorption bed for adsorbent regeneration, with the two beds alternating in function;

(3) a high efficiency micro-channels-loop-heat-pipe (MCLHP) based CDC heat recovery system;

(4) a high-performance heat storage/exchanger unit; and

(5) an internet-based intelligent monitoring and control system.

Fig. 1 Schematic of the CDC dew point cooling system

During operation, a mixture of return and fresh air will be pre-treated within the sorption bed (part of the sorption/desorption cycle), which lowers and stabilises the humidity ratio of the air, thus increasing its cooling potential. This air is then delivered into the dew point air cooler. Within the cooler, part of the air (the product air) will be cooled to a temperature approaching the dew point of its inlet state and delivered to the CDC spaces for indoor cooling. Meanwhile, the remaining air will receive the heat transported from the product air and absorb the moisture evaporated from the wet channel surfaces, becoming hot and saturated before being discharged to the atmosphere.
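Why pre-drying helps can be illustrated with two textbook relations: a Magnus-type approximation for the dew point, and the dew-point effectiveness ε = (T_in − T_out)/(T_in − T_dp,in) commonly used to rate such coolers. This is a sketch under stated assumptions, not the Hull team's model: the effectiveness of 0.8 is hypothetical, and holding the dry-bulb temperature fixed while lowering humidity is a simplification, since real sorption also warms the air.

```python
import math

# Magnus-type approximation constants (over water, roughly -45 °C to 60 °C).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # °C


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point (°C) from dry-bulb temperature and relative humidity."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def product_air_temp_c(temp_in_c: float, dew_point_in_c: float, effectiveness: float) -> float:
    """Product air outlet temperature from the dew-point effectiveness definition:
    eps = (T_in - T_out) / (T_in - T_dp_in)  =>  T_out = T_in - eps * (T_in - T_dp_in)
    """
    return temp_in_c - effectiveness * (temp_in_c - dew_point_in_c)


if __name__ == "__main__":
    eps = 0.8  # hypothetical dew-point effectiveness
    # Same dry-bulb temperature before and after (idealised) dehumidification.
    for rh in (50.0, 25.0):
        td = dew_point_c(30.0, rh)
        print(f"30 °C, {rh:.0f}% RH: dew point {td:.1f} °C, "
              f"product air {product_air_temp_c(30.0, td, eps):.1f} °C")
```

Lowering the humidity lowers the inlet dew point, and with it the temperature the product air can approach.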

As adsorbent regeneration requires significant amounts of heat, while the CDC data processing (or computing) equipment generates heat constantly, a micro-channel loop-heat-pipe (MCLHP) based CDC heat recovery system will be implemented. Within this system, the evaporator section of the MCLHP will be attached to the enclosure of the data processing (or computing) equipment to absorb the heat it dissipates, and the absorbed heat will be released to a dedicated heat storage/exchanger via the MCLHP condenser.

The regeneration air will be directed through the heat storage/exchanger, picking up heat and carrying it to the desorption bed for adsorbent regeneration, while the paraffin/expanded-graphite within the storage/exchanger acts as a heat balancing element, storing or releasing heat intermittently to match the heat required by the regeneration air. The heat collected from the CDC equipment and/or from solar radiation can be applied to adsorbent regeneration jointly or independently, and the system operation will be managed by an internet-based intelligent monitoring and control system.
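The balancing role of the heat store can be sketched as a lumped, time-stepped energy balance: heat from the MCLHP condenser (and/or solar input) flows in, the regeneration air draws heat out, and anything beyond the store's capacity is surplus. The `HeatStore` class and all numbers are hypothetical; the roughly 200 kJ/kg latent heat used for the paraffin is an assumed order of magnitude, and the model ignores temperature levels, losses and heat-transfer rates.

```python
from dataclasses import dataclass


@dataclass
class HeatStore:
    """Hypothetical lumped model of the paraffin/expanded-graphite store (energy in kJ)."""
    capacity_kj: float
    stored_kj: float = 0.0

    def step(self, heat_in_kw: float, demand_kw: float, dt_s: float) -> tuple[float, float]:
        """Advance one time step of dt_s seconds (kW * s = kJ).

        heat_in_kw: heat arriving from the MCLHP condenser and/or solar input.
        demand_kw:  heat requested by the regeneration air.
        Returns (heat delivered to the regeneration air in kW,
                 surplus heat that could not be stored in kJ).
        """
        available_kj = self.stored_kj + heat_in_kw * dt_s
        delivered_kj = min(demand_kw * dt_s, available_kj)   # cannot give more than is held
        remaining_kj = available_kj - delivered_kj
        surplus_kj = max(0.0, remaining_kj - self.capacity_kj)  # store is full
        self.stored_kj = remaining_kj - surplus_kj
        return delivered_kj / dt_s, surplus_kj


if __name__ == "__main__":
    # Illustrative: ~5 kg of paraffin at an assumed ~200 kJ/kg latent heat.
    store = HeatStore(capacity_kj=5 * 200.0)
    # Charge while recovered heat exceeds demand, then discharge.
    for heat_in, demand in [(2.0, 1.0), (2.0, 1.0), (0.5, 2.0)]:
        delivered, surplus = store.step(heat_in, demand, dt_s=600)
        print(f"delivered {delivered:.2f} kW, surplus {surplus:.0f} kJ, "
              f"stored {store.stored_kj:.0f} kJ")
```

In the last step the store releases previously banked heat, so the regeneration air receives 2 kW even though only 0.5 kW is arriving.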

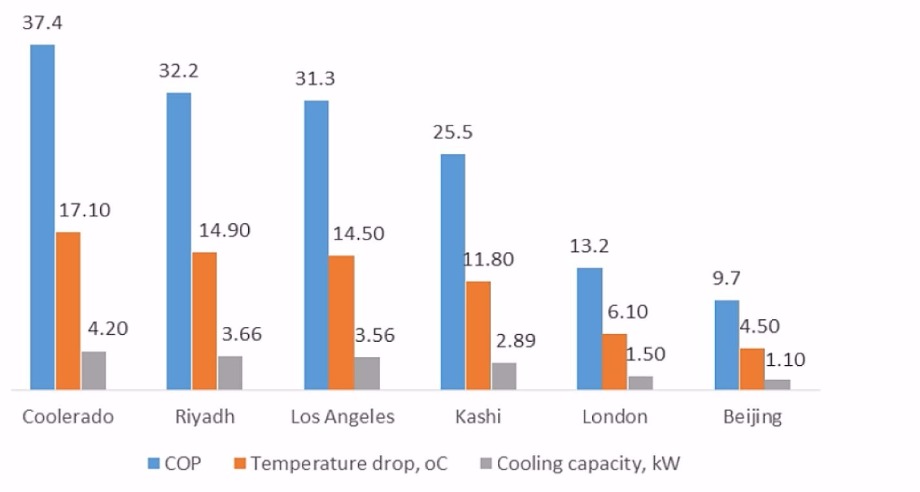

This very high performance has been validated by simulation and by prototype experiments carried out in the laboratories of Hull and other partners. The coefficient of performance (COP) of the proposed dew point cooling system reaches as high as 37.4 in ideal weather conditions, while the average COP of a traditional cooling system is around 3.0. The tested performance of the new system under various climatic conditions is depicted in Fig. 2.

Fig. 2 Performance of the super-performance dew point cooler under various climatic conditions.
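Because COP is the cooling delivered divided by the electricity consumed, the COP figures above translate directly into cooling-plant power for a given heat load. The sketch below assumes a hypothetical 100 kW load and uses the COPs quoted in the article (3.0 traditional, 20 and 37.4 for the dew point system). It covers only the cooling plant at constant load, ignoring fans, pumps and regeneration heat, so it is not a reproduction of the project's 60% to 90% estimate.

```python
def electrical_input_kw(cooling_load_kw: float, cop: float) -> float:
    """Electrical power needed to remove a heat load, from COP = Q_cooling / P_electric."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    return cooling_load_kw / cop


def relative_saving(cop_baseline: float, cop_new: float) -> float:
    """Fraction of cooling electricity saved when moving from cop_baseline to cop_new."""
    return 1.0 - cop_baseline / cop_new


if __name__ == "__main__":
    load_kw = 100.0  # hypothetical heat load
    for label, cop in [("traditional (COP 3.0)", 3.0),
                       ("dew point (COP 20)", 20.0),
                       ("dew point, ideal (COP 37.4)", 37.4)]:
        print(f"{label:>28}: {electrical_input_kw(load_kw, cop):5.1f} kW electrical")
    print(f"saving at COP 20 vs 3.0: {relative_saving(3.0, 20.0):.0%}")
```

At the same load, moving from a COP of 3.0 to 20 cuts cooling-plant electricity by 1 − 3/20 = 85%.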

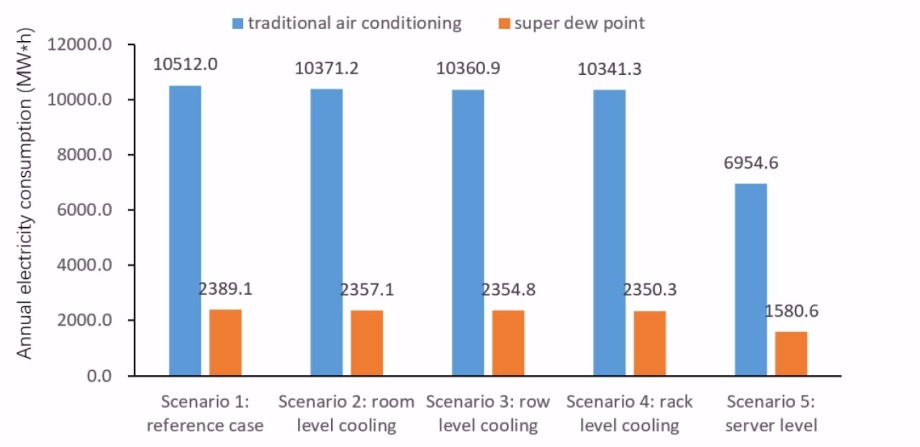

A dynamic simulation was also carried out under the UK (London) climate for four CDC scale types (small, medium, large and super) and five application scenarios (room space level, row level, rack level, server level). The results show dramatic annual electricity savings compared with reference cases using traditional cooling systems, especially for server-level cooling. The annual energy consumption comparison for a large-scale CDC is shown in Fig. 3 as an example. The results also show that the larger the CDC, the more electricity would be saved by applying the super dew point air conditioning system.

The estimated annual electricity saving for the reference Data centres in UK were:

Fig. 3 Annual energy consumption of the traditional and new cooling systems for a large-scale CDC under various application scenarios.

To summarise, the development, test and demonstration of the innovative super performance dew point cooling system for CDCs are going to be completed by 2020. The wide application of such high-performance cooling system will overcome the difficulties remaining with existing cooling systems, thus achieving significantly improved energy efficiency, enabling the low-carbon operation and realizing the green dream in CDCs.

Professor Xudong Zhao, BEng. MSc. DPhil. CEng. MCIBSE, is a Director of Research and Chair Professor at the School of Engineering and Computer Science, University of Hull (UK), and enjoys a global reputation as a distinguished academic in the areas of sustainable building services, renewable energy and energy efficiency technologies. Over a 30-year professional career, he has led or participated in 54 research projects funded by the EU, EPSRC, the Royal Society, Innovate UK, the China Ministry of Science and Technology and industry, with accumulated funding in excess of £14 million, as well as 40 engineering consultancy projects worth £5 million, and he has claimed five patents. To date, he has supervised 24 PhD students and 14 postdoctoral research fellows, published 150 peer-reviewed papers in high-impact journals and refereed conferences, been involved in authoring three books, and chaired, organised or given keynote (invited) speeches at 20 international conferences.

By Matthew Philo Product Manager – Denco Happel

The data centre industry continues to develop and innovate at a pace like no other, but this does not change core principles for the operations managers; they want simplicity and efficiency, but never at the cost of reliability. Matthew Philo, Denco Happel’s Product Manager CRAC, explains why these principles were central during the testing and development of their new free cooling solution.

As energy costs continue to rise, data centre owners and managers are looking for ways to reduce the amount of energy used by both IT and supporting infrastructure. Considering that the energy used for climate control and UPS systems can be around 40% of a data centre's total energy consumption¹, efficient cooling systems can significantly cut carbon footprints and energy bills. Over the past year, we have been looking at a new way of combining free cooling with the reliability of mechanical cooling technology to help IT managers improve their Data Centre Infrastructure Efficiency (DCiE) and Power Usage Effectiveness (PUE).
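PUE and DCiE are two views of the same ratio: PUE is total facility energy divided by IT energy, and DCiE is IT energy as a percentage of total facility energy, i.e. 100/PUE. The sketch takes the article's 40% figure for climate control plus UPS and, as a simplifying assumption, treats the remaining 60% as IT load; the 25% reduction in the non-IT share is hypothetical.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_kwh


def dcie_percent(total_facility_kwh: float, it_kwh: float) -> float:
    """Data Centre Infrastructure Efficiency: IT energy as % of total (= 100 / PUE)."""
    return 100.0 * it_kwh / total_facility_kwh


if __name__ == "__main__":
    it = 600.0
    # Simplified split: climate control + UPS at 40% of a 1,000 kWh total, remainder IT.
    before = it + 400.0
    # Hypothetical 25% cut in the climate control + UPS share.
    after = it + 300.0
    for label, total in (("before", before), ("after", after)):
        print(f"{label}: PUE {pue(total, it):.2f}, DCiE {dcie_percent(total, it):.1f}%")
```

With these assumed figures, PUE falls from about 1.67 to 1.50 and DCiE rises from 60% to about 66.7%.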

The existing Multi-DENCO® range, which had already introduced inverter compressors so that heat rejection could be matched exactly to room requirements, was taken as the starting point. Whilst a data centre operates 24/7, its cooling requirements vary throughout the day and across the seasons. This means that many units spend most of their life in part-load conditions, below 100% output.

It therefore made sense to increase efficiency at part-load conditions to reap the biggest benefits. When we incorporated variable-speed technology, such as EC fans and inverter compressors, into our refrigerant-based, direct expansion (DX) Multi-DENCO® solution, it provided the opportunity to reduce energy consumption by exploiting the so-called ‘cube root’ principle (more precisely, the affinity law under which fan power varies with the cube of speed). A good rule of thumb is that a 20% reduction in speed gives roughly a 50% reduction in energy consumption. This means that if you can operate at 80% rather than 100%, you very quickly see your energy consumption halve.
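The rule of thumb follows from the affinity law: at 80% speed, power is 0.8³ ≈ 0.512 of full-speed power, a saving just under 50%. This is an idealised sketch: the cube law applies strictly to fans and pumps working against a fixed system curve, and inverter compressors only approximate it.

```python
def affinity_power_fraction(speed_fraction: float) -> float:
    """Fan affinity law (idealised): power scales with the cube of speed."""
    if speed_fraction < 0:
        raise ValueError("speed fraction must be non-negative")
    return speed_fraction ** 3


if __name__ == "__main__":
    for speed in (1.0, 0.9, 0.8, 0.7):
        p = affinity_power_fraction(speed)
        print(f"speed {speed:.0%} -> power {p:.1%} (saving {1 - p:.1%})")
```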

However, this did not mean our progress on efficiency had finished. We realised that we could deliver further energy savings by exploiting the variability of the outdoor environment - in particular when it gets colder.

We knew that we needed to keep the full DX circuit within our design, to give the reliability that was required by our customers. But a refrigeration circuit does not benefit greatly from colder weather, so we focused on using indirect free cooling to provide suitably cold water to the indoor unit.

Outside of peak summer temperatures, this water circuit would reduce or remove the need for mechanical cooling (i.e. a direct expansion circuit), and the Multi-DENCO® F-Version was born.

In typical indoor conditions, 100% of the cooling requirements could be provided by the free-cooling circuit up to an outdoor temperature of 10°C. If the unit is operating in part-load conditions, it can continue to fully meet a datacentre’s cooling needs beyond this temperature, which means that the DX circuit’s compressor can be switched off for longer to save energy. To maintain the unit’s reliability, the free-cooling water circuit was kept separate to ensure that the DX circuit could operate independently if it was needed to fully meet the cooling load. A new EC water pump was chosen to give variable control and deliver the same energy-saving benefits offered by other models in the Multi-DENCO® range.

Whilst the benefits of 100% free cooling are easy to understand, the significant advantages of mixed-mode operation can easily be overlooked. Mixed mode, where the free cooling and direct expansion circuits operate simultaneously, is possible up to an outdoor temperature 5°C below the indoor environment’s set point (for example, a set point of 30°C would allow mixed-mode operation up to 25°C), which in Europe can be a large percentage of the year.
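The three operating regimes described above can be summarised as a simple, full-load mode selector. This is a hypothetical illustration built only from the thresholds quoted in the article (full free cooling up to 10°C outdoors, mixed mode up to 5°C below the set point); it is not DencoHappel's control logic, which would also consider water temperatures, actual load and hysteresis. Part-load operation, which extends free cooling beyond 10°C, is not modelled.

```python
from enum import Enum


class Mode(Enum):
    FREE_COOLING = "free cooling only"
    MIXED = "mixed mode (free cooling + DX)"
    DX_ONLY = "DX only"


def select_mode(outdoor_c: float, set_point_c: float,
                full_free_cooling_limit_c: float = 10.0,
                mixed_margin_k: float = 5.0) -> Mode:
    """Hypothetical full-load mode selection from outdoor temperature.

    - At or below full_free_cooling_limit_c, the water circuit covers the whole load.
    - Up to (set_point_c - mixed_margin_k), free cooling still contributes (mixed mode).
    - Above that, the DX circuit carries the full load.
    """
    if outdoor_c <= full_free_cooling_limit_c:
        return Mode.FREE_COOLING
    if outdoor_c <= set_point_c - mixed_margin_k:
        return Mode.MIXED
    return Mode.DX_ONLY


if __name__ == "__main__":
    for t in (5.0, 18.0, 25.0, 28.0):
        print(f"{t:>4} °C outdoor, 30 °C set point -> {select_mode(t, 30.0).value}")
```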

During those many hours of mixed-mode operation, the ‘cube root’ principle is being exploited. The free cooling circuit may contribute only a small percentage of the cooling, but it also reduces the operating condition of the direct expansion circuit. As mentioned earlier, if the free cooling circuit can provide 20% of what is required, this is 20% less for the direct expansion circuit, which means the direct expansion circuit will save around 50% in energy consumption while the unit is in mixed mode.

Energy consumption continues to be a key factor in the operational cost of a data centre. As datacentres come under mounting pressure to increase performance, managers want to take advantage of any efficiency options available. By combining the reliability of a direct expansion circuit with a simple indirect free cooling circuit, energy efficiency can be improved without risking interruptions to a datacentre’s critical operations.

For more information on DencoHappel’s Multi-DENCO® range, please visit http://www.dencohappel.com/en-GB/products/air-treatment-systems/close-control/multi-denco

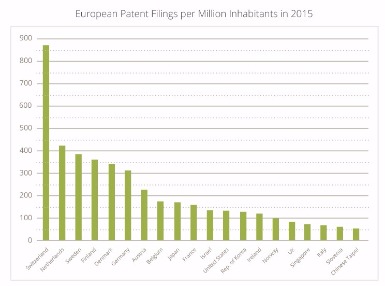

A recent document published by the European Patent Office (EPO) includes a graph which claims to be “measuring inventiveness” of the world’s leading economies using the ratio of European patent filings to population[1].

The data, reproduced in the graph (top right), shows the number of European patent filings per million inhabitants in 2015. Switzerland comes out on top, with 873 applications per million inhabitants, whilst the UK sits 16th on the list with only 79 applications per million inhabitants. This means that Switzerland has over ten times as many European patent filings as the UK, per million inhabitants.

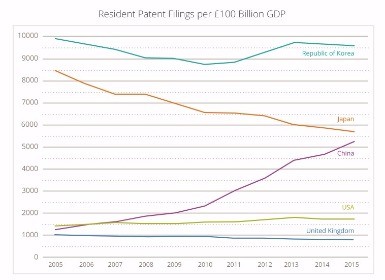

Additional data, provided by the World Intellectual Property Organisation (WIPO)[2], shows resident patent filings per £100bn GDP for the last 10 years; see the graph (bottom right). The UK is at the bottom of the pile, flat-lining at only about one filing per £100m GDP (roughly 1,000 per £100bn). In 2015, the USA beat the UK by a factor of about two and Korea beat the UK by a factor of over ten.

These graphs show slightly different things. One shows European patent filings, the other shows resident patent filings (i.e. filings in a resident’s “home” patent office). However, they both make the same point loud and clear: UK companies file significantly fewer patent applications, in relative terms, than their competitors in other countries.

What is less clear is why the numbers are so low. Broadly speaking, there are two possible explanations.

One is that the UK really is less inventive than the rest of the world - as the EPO graph would have you believe. We would like to think that’s not true - the UK is renowned in the world of innovation, with UK inventors famously having invented the telephone, the world wide web, and recently even the holographic television, to name but a few.

A more plausible explanation is that the UK has a different patent filing “culture”, which originates from a number of factors:

SoftBank’s £24bn takeover of ARM was the biggest ever tech deal in the UK, and the majority of that value can be attributed to ARM’s patent portfolio.

So the reasons are many and varied, but the message to UK companies is clear: your international competitors are likely to be filing more patents than you, and you need a strategy that takes this into account. This might involve filing more patent applications, or simply becoming more aware of your competitors’ patent portfolios.

Withers & Rogers is one of the leading intellectual property law firms in the UK and Europe. They offer a free introductory meeting or telephone conversation to companies that need counsel on matters relating to patents, trademarks, designs and strategic IP. For more information call 020 7940 3600 or visit www.withersrogers.com

[1] EPO Facts and figures 2016, page 15

Jim Ribeiro

Matthew Pennington

These are interesting times for Six Degrees Group. Launched in 2011, its strategy was to combine the capabilities offered by a true data centre and converged network operator with the flexibility and service-creation skills of an agile startup.

With a focus on mid-market customers, the company has grown into a £100m business. Highly acquisitive over its first five years, it bought 19 businesses before original owner Penta sold 6DG in 2015 to new backers Charlesbank Capital, a Boston and New York-based investment business with more than $3bn of assets under management.

Following the change of ownership, Six Degrees has more recently undergone a change in leadership with the appointment of David Howson as CEO in February this year. Upon his appointment, Howson, who previously held senior positions at Zayo and Level 3 Communications, said he was looking forward to “helping Six Degrees establish itself as the market leader for mission-critical managed services and to drive growth both organically and through strategic acquisitions”.

Howson spent his first 60 days assessing the company’s capabilities, strengths and opportunities, shaping his view on strategy and reviewing what had already been put in place by the company’s founders. He also talked to many customers and clients. “I have spent a lot of my career in front of customers and I used that experience heavily in this process,” he reveals.

One of the first things Howson has done is to change the organisational structure of 6DG to align with three key areas: advanced solutions, converged solutions and partner solutions. These key business units are backed by two platform units, Network Services and Platform Services, and the company has consolidated its product offerings under the Six Degrees Group brand.

The reorganisation comes on top of a major investment in the internal platform using ServiceNow for customer experience and Tableau for live management information. Howson says the ServiceNow investment is already delivering value and will provide the foundation to significantly scale the business.

One of the company’s aims this year is to combine the capabilities from its 19 acquisitions into a converged offering for the mid-market and develop a set of specialisms that are better matched to clients to drive higher growth.

“The key is alignment,” Howson says. “We are integrating the five standalone companies. We are making sure all our services are available to all our clients.” He believes that the company’s strengths in individual capabilities should put it in an even stronger position when it converges them for customers.

Howson is keen to stress that the company will not deviate from its commitment to owning its own infrastructure. “It’s about delivering on customer SLAs and that is the main reason why we own our infrastructure.”

In terms of verticals, Six Degrees is particularly strong in financial services and retail, but the company plans to become more heavily involved in other sectors going forward. When it comes to operating in other countries, Howson stresses that the company intends to continue its focus on the UK market for now but it can deliver international capabilities for UK customers with overseas needs if they want to deal with a single converged provider.

Having made 19 acquisitions in five years, Six Degrees is now concentrating on “getting to grips with the acquisitions”, according to Howson. There are no plans for more M&A activity in the near future: “We are internally and customer focused at present, but are always seeking inorganic opportunities that create additional value,” he adds.

For now, most of the focus is on bedding in the new operational model and ensuring top line and EBITDA growth. “Going forward, we aim to make sure all parts of the business are growing, although some will grow faster than others,” Howson states. “The plan is to grow revenue and EBITDA.”

The modular UPS sector is on the rise, presenting uncapped opportunity for business. Leo Craig, general manager of Riello UPS, offers his guidance on the action businesses should be taking to maximise modular scale-up.

The upward trajectory of the global modular UPS market shows no signs of abating. According to a report published last year by global research firm Frost & Sullivan, the market is expected to grow twice as fast as the traditional UPS market over the 2015–2020 forecast period, with growth predicted to accelerate after 2017.

Data centres continue to dominate market revenue in the modular UPS sector. Thanks to the internet of things, smart devices are fuelling huge demand on data centres and this is only going to increase. For data centres needing to achieve rapid expansion in order to keep pace with demand for increased processing, the modular UPS provides a raft of benefits.

Modular UPS solutions can be scaled up in tandem with the growing demands of a business, removing the risk of oversizing a UPS unnecessarily at the outset. They offer maximum availability, scalability, reliability and serviceability, whilst also ensuring high efficiency, low cost of ownership and high power density. And, whilst modular systems can be scaled up to meet increased demand, data centres can just as easily switch modules off, guarding against under-utilisation. The modular UPS also addresses limited floorspace, which is an increasing issue for data centres. Modular UPS systems can be expanded vertically, provided there is room within the existing cabinet for additional UPS modules, or horizontally with the addition of a further UPS cabinet.
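Scaling in step with demand comes down to a simple sizing calculation: enough modules to carry the load, plus any redundant modules, and a further cabinet once the existing frame is full. The sketch assumes N+1 redundancy, a 25 kW module rating and eight module slots per cabinet; all three are illustrative assumptions rather than figures from the article or any particular product.

```python
import math


def modules_required(load_kw: float, module_kw: float, redundant_modules: int = 1) -> int:
    """UPS modules needed: enough to carry the load (N) plus redundant spares."""
    if load_kw < 0 or module_kw <= 0 or redundant_modules < 0:
        raise ValueError("invalid sizing inputs")
    return math.ceil(load_kw / module_kw) + redundant_modules


if __name__ == "__main__":
    module_kw = 25.0  # hypothetical module rating
    frame_slots = 8   # hypothetical module slots per cabinet
    for load in (60.0, 100.0, 140.0, 200.0):
        n = modules_required(load, module_kw)
        cabinets = math.ceil(n / frame_slots)
        print(f"{load:5.0f} kW -> {n} modules (N+1), {cabinets} cabinet(s)")
```

Up to 140 kW the growth is vertical (more modules in one cabinet); at 200 kW the nine modules no longer fit in eight slots, so expansion becomes horizontal.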

When it comes to maintenance, modular UPS systems are marginally easier to service and repair in situ than a standalone UPS system, because a failed UPS module can be ‘hot-swapped’. The failed or suspect module is then returned to a service centre for investigation, whereas returning a standalone UPS system to active service may require a board swap.

Maintenance, of course, is a hot topic currently – in the wake of the UPS-related issues experienced by British Airways earlier this year, which had disastrous consequences for the business. As this example shows, the way in which maintenance is carried out needs to be carefully considered, whether you choose to implement a modular or centralised UPS system.

Human error is the main cause of problems occurring during maintenance procedures; engineers may throw a wrong switch, or carry out a procedure in the wrong order. But, whilst it might be easy to lay blame solely at the feet of the engineer in these instances, errors of this kind are often the result of poor operational procedures, poor labelling or even poor training. By ironing out these areas right at the start of the UPS installation, risks can be avoided.

For example, if the solution being deployed is a critical system comprising large UPSs in parallel and a complex switchgear panel, Castell interlocks should be incorporated into the design. Castell interlocks force the user to switch in a controlled and safe sequence, but are often left out of the design to save costs at the start of the project. This is a common occurrence, and the client could pay dearly in the future if a switching error occurs.

Simple things can make a difference. By ensuring that basic labelling and switching schematics are up to date, disaster can be averted. Having clearly documented switching procedures available is recommended. If the site is extremely critical, a Pilot/Co-Pilot procedure (two engineers both check the procedure before carrying out each action) will prevent most human errors.

Any maintenance is typically intrusive to the UPS or switchgear, so managing it carefully is vital. Most problems, including the failure of electrical components, are preceded by an increase in heat. If a connection point isn’t tightened properly, for example, it will start to heat up and eventually fail in some way. Short of physically checking every connection, the most effective solution is thermal imaging. Thermal imaging cameras are relatively cost-effective and easy to use these days, making them a worthwhile investment. Thermal imaging can identify potential issues that wouldn’t necessarily be picked up using conventional techniques, without the need for physical intervention.
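Readings from a thermal imaging survey are often judged in two ways: by their temperature rise above ambient, and by comparison with similar points under similar load (a loose termination usually runs hotter than its neighbours). The sketch below applies both checks. The function name and both thresholds are hypothetical placeholders; real inspection criteria should come from the maintenance provider or the relevant standards.

```python
from statistics import median


def flag_hotspots(readings_c: dict[str, float], ambient_c: float,
                  max_rise_k: float = 20.0, peer_margin_k: float = 10.0) -> list[str]:
    """Flag connection points that look suspicious in a thermal image survey.

    A point is flagged if its rise over ambient exceeds max_rise_k, or if it runs
    more than peer_margin_k hotter than the median of comparable points.
    Both thresholds are hypothetical placeholders.
    """
    typical_c = median(readings_c.values())
    return [
        name for name, temp_c in sorted(readings_c.items())
        if temp_c - ambient_c > max_rise_k or temp_c - typical_c > peer_margin_k
    ]


if __name__ == "__main__":
    # Illustrative readings for the terminations of one UPS input (°C).
    survey = {"L1": 35.0, "L2": 36.0, "L3": 52.0, "N": 34.0}
    print(flag_hotspots(survey, ambient_c=25.0))
```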

Round-the-clock equipment monitoring also offers robust protection and should be part of the maintenance package, as UPSs will raise an alarm if any parameter of their operation is out of range, for example if an increase in heat, a fan failure or a problem with the batteries is detected. It is highly unlikely that UPS failures will be limited to the times when the engineer is carrying out the annual maintenance visit, so constant monitoring is critical.
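The kind of parameter checking described here can be sketched as a loop that compares each polled value against an alarm band and also treats a missing reading as an alarm. The parameter names and limits below are hypothetical; real limits come from the UPS manufacturer, and this does not represent any vendor's monitoring interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical alarm limits; real values come from the UPS manufacturer.
LIMITS = {
    "cabinet_temp_c": Limit(0.0, 40.0),
    "fan_speed_rpm": Limit(1000.0, 6000.0),
    "battery_voltage_v": Limit(380.0, 460.0),
}


def check_sample(sample: dict[str, float]) -> list[str]:
    """Return alarm messages for any missing or out-of-range parameter."""
    alarms = []
    for name, limit in LIMITS.items():
        value = sample.get(name)
        if value is None:
            alarms.append(f"{name}: no reading")
        elif not limit.low <= value <= limit.high:
            alarms.append(f"{name}: {value} outside {limit.low}-{limit.high}")
    return alarms


if __name__ == "__main__":
    # A failed fan reads 0 rpm; the battery voltage is missing from this poll.
    print(check_sample({"cabinet_temp_c": 31.0, "fan_speed_rpm": 0.0}))
```

Treating a missing reading as an alarm matters because a silent sensor or communication failure should not look the same as a healthy UPS.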

Rigorous training is also vital and, to protect themselves, clients must ensure that the attending engineer is certified to carry out the work. It is the responsibility of the client to ask the maintenance company for proof of competency levels – pertaining both to the company itself and to the engineers it uses. Risk averse clients should also check ‘on the day’ that the engineer on site is competent and isn’t, for instance, a last-minute sub-contractor sent in because the original engineer is off sick.

A strong maintenance package should also ensure that when the UPS does fail, the response is timely and effective. Service level agreements need to be appropriate to the criticality of the application. There is no point having a maintenance contract with a 24/7 response for a UPS if access to the UPS can only be gained during normal business hours. Conversely, if operations are 24/7 and critical to the business, then a 24/7 response is a must.

Caution should be applied wherever maintenance contracts seem too good to be true – can a two-hour response really be guaranteed, for instance? Anyone who drives on the M25 might question this! It is also worth checking exactly what constitutes the ‘response’ - will it just be a phone call or will it be someone coming to site, and, if so, will that someone be a competent engineer? It’s important to pay attention to the guaranteed fix time too as it doesn’t matter how quickly an engineer arrives on site if the problem then takes a week to fix because of parts being delayed and so on.

Finally, if the UPS can’t be fixed within a certain timescale, you need to understand what your course of redress is; for example, will the UPS be replaced?

Maintenance continues to be a key concern for any business investing in a UPS, be it modular or stand-alone. Ease of maintenance is, no doubt, one of the differentiators helping to drive growth in the modular UPS market but, whatever UPS product businesses select, it is essential they apply proper due diligence to their maintenance approach. Watertight maintenance processes and procedures should be in place and relevant documentation must be easily and readily available. As well as ensuring that switches cannot be thrown by accident, businesses need to check that engineers are competent and should study the SLAs in maintenance agreements. By adding technologies such as thermal imaging to the maintenance mix, they can reduce the likelihood of issues further. Stringent maintenance processes should be the constant factor in an ever-evolving market.

A recent Gartner forecast predicted that ‘8.4 billion connected things will be in use in 2017’, an increase of 31 percent from 2016. Gartner also predicted that this figure will reach 20.4 billion by 2020, which gives some indication of how quickly the IoT is growing, and will continue to grow.
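The Gartner figures imply two derived numbers that help put the growth in context: the 2016 base implied by a 31 percent increase, and the compound annual growth rate needed to go from 8.4 billion in 2017 to 20.4 billion in 2020. These are back-of-the-envelope calculations from the quoted figures, not numbers published by Gartner.

```python
# Figures quoted from the Gartner forecast, in billions of connected things.
units_2017 = 8.4
units_2020 = 20.4
growth_2016_to_2017 = 0.31

# Implied 2016 installed base: 8.4 / 1.31.
units_2016 = units_2017 / (1 + growth_2016_to_2017)

# Compound annual growth rate over the three years from 2017 to 2020.
years = 2020 - 2017
cagr = (units_2020 / units_2017) ** (1 / years) - 1

print(f"Implied 2016 base: {units_2016:.1f} billion")          # about 6.4 billion
print(f"Implied annual growth 2017-2020: {cagr:.1%}")          # about 34.4% a year
```

In other words, the forecast assumes growth slightly faster than the 31 percent seen between 2016 and 2017, sustained for three more years.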

By Mike Kelly, CTO at Blue Medora.

The IoT is becoming more and more naturally integrated into daily life, and with this come big opportunities for organisations. The growing volume of IoT data gives businesses the potential to gain both insight and competitive advantage, through analytics, new marketing strategies and improved operational productivity.

However, for businesses to transform this opportunity into revenue, the data needs to be securely analysed, stored and shared. In order to manage all of this data, databases have become much more complex and, consequently, IT teams are struggling with the burden of having to manage so much. Many IT professionals have to rely on disparate tools and out-of-date equipment to manage their database infrastructures, resulting in added complexity and shortcomings.

When working with the IoT, one of the most vital components is database technology and, because of this, it too has been developing rapidly. The shift from traditional SQL databases to NoSQL, open source, Big Data and cloud databases has happened fairly quickly, alongside the swift adoption of both cloud infrastructure and virtualisation.

All of this combined makes for rapid evolution in the IT world, and it is this advancement in the database space that allows many organisations and corporations around the world to use data analytics from the IoT and Big Data. It also supports the development processes that IT teams use to help their businesses perform well.

It is this rapid growth of database technology that has caused database monitoring and management technology to fall far behind. For the most part, today’s database monitoring capabilities are still aimed at the on-premises, bare-metal, traditional SQL world, nowhere near meeting the requirements that enable businesses to make the most of their IoT data.

The essential tool here is a database monitoring system that provides a comprehensive view of the data stack. This means IT teams can easily understand their data and the complex functions going on in their environments. If a business can effectively monitor its database layers to optimise performance, and resolve any bottlenecks caused by the huge volumes of data that IoT devices can generate, it will be in a far stronger position than other organisations, because it will be able to use the data it collects efficiently and expertly.
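One everyday job of a database monitoring system is spotting bottlenecks: collecting latency samples per query and flagging those whose tail latency is too high. The sketch below shows that idea in its simplest form, using a nearest-rank 95th percentile. The query names, sample values and 200 ms limit are hypothetical, and this is a generic illustration rather than how any particular monitoring product works.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def find_bottlenecks(samples, p95_limit_ms):
    """Group (query_name, latency_ms) samples; return queries whose p95 latency exceeds the limit."""
    by_query = defaultdict(list)
    for name, latency_ms in samples:
        by_query[name].append(latency_ms)
    slow = {}
    for name, latencies in by_query.items():
        p95 = percentile(latencies, 95)
        if p95 > p95_limit_ms:
            slow[name] = p95
    return slow


# Hypothetical samples: fast sensor inserts and an occasionally very slow hourly roll-up.
samples = [
    ("insert_reading", 4), ("insert_reading", 6), ("insert_reading", 5),
    ("hourly_rollup", 180), ("hourly_rollup", 950), ("hourly_rollup", 210),
]
print(find_bottlenecks(samples, p95_limit_ms=200))  # {'hourly_rollup': 950}
```

Using a high percentile rather than an average is a deliberate choice: an average would hide the occasional very slow run, which is often exactly the bottleneck that matters.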

The Internet of Things has already begun to change how businesses perceive and use data. Yet IT teams are unable to analyse and understand their database infrastructures because of the vast amounts of data that are constantly being collected. IoT data will unfortunately make IT infrastructures and databases considerably more complex and harder to manage. To tackle this, IT teams need to make sure they have a database monitoring system and IT management tools in place, in order to gain full visibility, reduce network complexity and spot any underlying problems before they become issues. This way, businesses can make the most of IoT data.

Dr. Alex Mardapittas, managing director of leading energy storage and voltage optimisation brand Powerstar, discusses why a battery-based energy storage solution provides a more modern approach to Uninterruptible Power Supply (UPS) and highlights the range of benefits the technology can provide for businesses looking not only to secure the supply to critical systems and data centres but also to reduce energy costs.

We are living in a digital age, where an increasing number of items are connected to the ‘Internet of Things’ (IoT) and consumers are both consciously and unconsciously providing companies with a significant amount of personal data. With a greater number of public and private sector businesses placing more emphasis on ‘big data’ and how it can produce vital statistics and potentially drive sales, it is perhaps no surprise that the number of data centres is on the rise.

With increasing levels of data come not only potential security issues but also power problems. Recent information produced by the Payment Card Industry Security Standards Council (PCI SSC) has warned UK businesses that they could face up to £122bn in penalties for data breaches. Alongside this, as data volumes and the cost of electricity from both the National Grid and energy providers increase, data centres are predicted to require three times as much energy in the coming decade as they do now, with all the financial implications this entails. With this in mind, it will be essential to install engineered energy solutions that ensure a constant, stable supply while reducing electricity consumption and CO2 emissions.

As recently documented in the national news, power supply issues such as blackouts, brownouts, voltage spikes and dips can cause significant damage to highly sensitive equipment, resulting in major disruption for companies and their customers. Keen to avoid the consequences of data centre power failures, many facilities implement full backup power or uninterruptible power supply (UPS) functionality.

Historically, backup power has been provided by combined heat and power (CHP) units or generators, both of which are still present in many facilities across the UK. However, even though CHP units and generators provide energy off the grid and offer sufficient backup power, they are ageing systems in a technologically advanced world. They also cannot deliver power to the load (a data centre, for example) instantly when required.

In contrast, one of the most recently discussed and effective UPS innovations is large-scale battery-based energy storage, with outputs starting from 50kW. Battery-based energy storage simply replicates what you would find in most battery-powered devices: the batteries charge from the National Grid, or from renewable sources where possible, and store that energy so they can supply a load, or facility, almost immediately when required.

Even though it is a modern solution, battery-based energy storage is already in operation within data centres across the world and provides a host of UPS benefits. A bespoke engineered storage solution will constantly monitor and measure the electricity supply to the load and will intelligently recognise when support is required, such as during high-demand periods on the National Grid or when power quality dips. When support is triggered, the batteries respond automatically within three milliseconds, supplying electricity to the sensitive load for up to two hours.
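The monitor-and-trigger behaviour described above can be sketched as a simple control loop: on each measurement interval, switch the load to the batteries if the grid is at peak demand or the voltage leaves an acceptable band, up to the two-hour limit quoted. As an illustrative assumption, the trigger band below uses the UK statutory supply limits of 230 V +10%/-6%. Real systems work on measured waveforms, track state of charge and recharge between events, none of which this simplified sketch attempts.

```python
from dataclasses import dataclass

# Illustrative trigger band (assumption): UK statutory supply limits of 230 V +10% / -6%.
NOMINAL_V = 230.0
LOW_V = NOMINAL_V * 0.94
HIGH_V = NOMINAL_V * 1.10
# Maximum support time quoted in the article: up to two hours.
MAX_SUPPORT_S = 2 * 60 * 60


@dataclass
class StorageState:
    seconds_on_battery: float = 0.0  # discharge time used so far (this sketch never recharges)


def needs_support(voltage: float, grid_peak: bool) -> bool:
    """Support is needed during a grid demand peak or when voltage leaves the trigger band."""
    return grid_peak or not (LOW_V <= voltage <= HIGH_V)


def step(state: StorageState, voltage: float, grid_peak: bool, dt_s: float) -> str:
    """Advance the controller by one measurement interval and return the active source."""
    if needs_support(voltage, grid_peak) and state.seconds_on_battery < MAX_SUPPORT_S:
        state.seconds_on_battery += dt_s
        return "battery"
    return "grid"


if __name__ == "__main__":
    state = StorageState()
    # A 1 ms interval stands in for the fast sampling needed to act within milliseconds.
    for v in [231.0, 205.0, 229.5]:
        print(v, step(state, v, grid_peak=False, dt_s=0.001))
    # 231.0 grid
    # 205.0 battery
    # 229.5 grid
```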

The nature of battery-based energy storage technology also provides a ‘future proof’ solution for high-technology critical locations such as data centres. Frequent changes in the processing power and speed of IT equipment, along with the growing range of electrical equipment connected to wireless controls and the IoT, mean that more scalability is required. With a bespoke engineered energy storage solution, provided by a company that has carried out a full site survey before installation, it is straightforward to establish the capacity the current system requires and to plan for any additional batteries that may need to be installed and connected if and when demand increases.
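At its simplest, the capacity planning described above is energy arithmetic: the battery energy needed is the critical load (kW) multiplied by the required support time (hours), divided by the fraction of the battery that may safely be discharged. The sketch below assumes a 90 percent usable fraction and a hypothetical 50 kWh battery module; both are illustrative, and it ignores inverter losses and battery ageing, which a real site survey would account for.

```python
import math


def required_energy_kwh(load_kw: float, runtime_hours: float, usable_fraction: float = 0.9) -> float:
    """Installed energy needed so that only usable_fraction of it is drawn down (assumed 90%)."""
    return load_kw * runtime_hours / usable_fraction


def modules_needed(load_kw: float, runtime_hours: float, module_kwh: float,
                   usable_fraction: float = 0.9) -> int:
    """Round up to whole battery modules of a hypothetical size."""
    return math.ceil(required_energy_kwh(load_kw, runtime_hours, usable_fraction) / module_kwh)


# Hypothetical site: 150 kW critical load today, expected to grow to 250 kW,
# with two hours of support from 50 kWh modules.
today = modules_needed(150, 2, module_kwh=50)
future = modules_needed(250, 2, module_kwh=50)
print(f"Today: {today} modules; after growth: {future} modules; plan space for {future - today} more")
# Today: 7 modules; after growth: 12 modules; plan space for 5 more
```

Planning for the larger figure at survey time is what allows extra batteries to be added later without redesigning the installation.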

Alongside the requirement for UPS, some of the most widely recognised and popular energy providers have been increasing tariffs for electricity use in both commercial and residential properties, even though Ofgem has recently reported that there is no clear reason why this should be the case.[1] What’s more, rising network charges, namely DUoS (Distribution Use of System) and Triad tariffs, are compounding the energy price concerns faced by businesses that consume moderate to high levels of electricity, such as data centres.

[1] https://www.ft.com/content/eadef124-de59-11e6-9d7c-be108f1c1dce

DUoS and Triads are established network charges placed on businesses for consuming energy at periods of high demand. DUoS rates vary with the time of day, while Triad charges are based on a site’s consumption during the three half-hour periods of highest national demand each winter. The charges can account for approximately 15-19% of a typical non-domestic electricity bill and can seem unavoidable; DUoS, for example, is charged by the Distribution Network Operator, which has a local monopoly on the distribution of electricity.
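Triads are defined as the three half-hour periods of highest demand on the GB transmission system between November and February, each separated from the others by at least ten clear days. They are only confirmed after the season ends, which is why businesses have to predict them. The sketch below applies that selection rule to a set of half-hourly demand figures. The demand values are invented for illustration, and the function assumes its input already covers only the November to February season.

```python
from datetime import datetime


def find_triads(demand_by_period, min_clear_days=10):
    """Pick the three highest-demand half-hours, each at least min_clear_days clear days apart.

    demand_by_period maps the start of each half-hour to system demand (MW) and is
    assumed to cover only the November-February Triad season.
    """
    chosen = []
    for start, demand_mw in sorted(demand_by_period.items(), key=lambda item: item[1], reverse=True):
        # Ten clear days between two dates means their calendar dates differ by more than ten days.
        if all(abs((start.date() - other.date()).days) > min_clear_days for other, _ in chosen):
            chosen.append((start, demand_mw))
        if len(chosen) == 3:
            break
    return chosen


# Invented demand figures for illustration only.
demand = {
    datetime(2016, 12, 5, 17, 0): 52000,
    datetime(2016, 12, 6, 17, 0): 51800,   # too close to 5 December to count
    datetime(2017, 1, 5, 17, 30): 51500,
    datetime(2017, 1, 26, 17, 0): 51000,
    datetime(2017, 2, 20, 17, 0): 49000,
}
for start, mw in find_triads(demand):
    print(start, mw)
```

The separation rule explains why the second-highest half-hour in this example does not count: it falls the day after the peak.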

To avoid DUoS and Triad charges, some companies reduce or switch off all electrical equipment at peak tariff times, usually between 16:00 and 19:00, Monday to Friday. However, a shutdown of this kind is clearly not an option in critical data centre facilities.

Because DUoS tariffs are published in advance, the peak charge can be avoided completely by using energy storage technology. Such solutions can store cheaper electricity at night or during other off-peak periods, usually 00:00 to 07:30 and 21:00 to 24:00, and across the weekend. The batteries can then discharge the stored energy during the peak DUoS period, allowing companies to save up to 24% on electricity costs. Triads are more difficult to predict, but current and historical demand data still allow them to be forecast very accurately, so energy storage can take a site off the grid whenever a Triad period is highly likely.
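The DUoS strategy above amounts to a time-of-day schedule: charge in the off-peak bands, discharge in the weekday peak band and otherwise hold. The sketch below encodes the bands quoted in the article. Actual DUoS time bands vary by distribution region and are published by each operator, so the constants are assumptions, and a real controller would add a forecast-driven override for likely Triad periods.

```python
from datetime import datetime, time

# Time bands quoted in the article (assumed; actual bands vary by distribution region).
PEAK_START, PEAK_END = time(16, 0), time(19, 0)   # weekday peak band
OFF_PEAK_MORNING_END = time(7, 30)                 # off-peak from 00:00 to 07:30
OFF_PEAK_EVENING_START = time(21, 0)               # off-peak from 21:00 to 24:00


def battery_action(now: datetime) -> str:
    """Return 'charge', 'discharge' or 'hold' for a given local date and time."""
    if now.weekday() >= 5:            # Saturday (5) or Sunday (6): off-peak all day
        return "charge"
    t = now.time()
    if PEAK_START <= t < PEAK_END:    # weekday peak band: supply the load from the batteries
        return "discharge"
    if t < OFF_PEAK_MORNING_END or t >= OFF_PEAK_EVENING_START:
        return "charge"
    return "hold"                     # weekday daytime outside the peak band


if __name__ == "__main__":
    # 5 June 2017 was a Monday; 3 June 2017 was a Saturday.
    for when in [datetime(2017, 6, 5, 3, 0), datetime(2017, 6, 5, 12, 0),
                 datetime(2017, 6, 5, 17, 0), datetime(2017, 6, 3, 17, 0)]:
        print(when, battery_action(when))
```

Keeping the bands as named constants makes it easy to swap in the published tariff for a particular region.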

A bespoke energy storage solution not only allows companies to use stored energy to make savings; it also enables them to export electricity back to the National Grid and generate additional revenue through Demand Side Response (DSR) incentives.

Supporting grid capacity through DSR using energy storage has the benefit of being significantly cheaper than maintaining normal electricity use through periods of high demand and, unlike diesel generators and CHP units, energy storage systems can be connected to the National Grid to discharge electricity instantly. As a result, the technology allows businesses to respond successfully to the majority of DSR events.

Moreover, as new incentives continue to be added to the DSR scheme, battery-based energy storage is likely to be one of the few technologies able to qualify for future benefits, as it is a clean form of energy that can respond to changes in grid frequency within 11 milliseconds.
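Responding to grid frequency usually means adjusting output in proportion to how far the frequency has drifted from the nominal 50 Hz: below 50 Hz demand exceeds supply, so the battery exports; above it, the battery absorbs. The sketch below shows such a proportional (droop-style) response. The deadband and full-response deviation are hypothetical values, not the parameters of any specific National Grid service, and the millisecond response time quoted above depends on the hardware, not on a calculation like this.

```python
NOMINAL_HZ = 50.0  # GB grid nominal frequency


def frequency_response_kw(freq_hz: float, max_power_kw: float,
                          deadband_hz: float = 0.015, full_response_dev_hz: float = 0.5) -> float:
    """Power setpoint: positive = discharge to the grid, negative = absorb (charge).

    deadband_hz and full_response_dev_hz are hypothetical illustration values.
    """
    deviation = freq_hz - NOMINAL_HZ
    if abs(deviation) <= deadband_hz:
        return 0.0                     # ignore small fluctuations around 50 Hz
    fraction = min(abs(deviation) / full_response_dev_hz, 1.0)
    # Low frequency: export power to support the grid; high frequency: soak up surplus.
    return fraction * max_power_kw if deviation < 0 else -fraction * max_power_kw


if __name__ == "__main__":
    for f in [50.0, 49.75, 49.5, 50.25]:
        print(f, frequency_response_kw(f, max_power_kw=100.0))
```

The proportional shape means the battery contributes more the further the grid strays from nominal, up to its rated output.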

As the importance of UPS support for data centres continues to grow, it is critical for companies to use a reputable provider that can deliver a fully bespoke engineered solution, such as energy storage technology. Modern battery-based energy storage systems in particular offer companies a range of benefits, including scalability, future-proofing and access to financial incentives.

Powerstar is a market leader in the industry, delivering a range of bespoke solutions that are designed and manufactured in the UK. For more information on voltage optimisation and energy storage visit the Powerstar website at www.powerstar.com

Crossrail selected ServiceNow to replace outsourced IT support and management system

Crossrail Limited is building a new railway for London and the South East, running from Reading and Heathrow in the west, through London to Shenfield and Abbey Wood in the east. It is delivering 42km of new tunnels and 10 new stations, and upgrading 30 more, while integrating new and existing infrastructure.

The £14.8 billion Crossrail project is Europe’s largest infrastructure project. Construction began in 2009 at Canary Wharf, and is now more than 80% complete.

The new railway, which will be known as the Elizabeth Line when services begin in central London in 2018, will be fully integrated with London’s existing transport network and will be operated by Transport for London (TfL). New state-of-the-art trains will carry an estimated 200 million passengers per year. The new service will speed up journey times, increase central London’s rail capacity by 10% and bring an extra 1.5 million people to within 45 minutes of central London.

Crossrail plans to hand over the new railway and all its assets, including IT, to Transport for London once works are complete. Crossrail worked with Fruition Partners to implement ServiceNow to provide IT support from construction right through to handover.

Because Crossrail is a project organisation, there is a high volume of joiners and leavers: employees are on-boarded to deliver contractual requirements and off-boarded once their work is complete. This translates directly into a higher-than-normal volume of IT requests for starters, movers and leavers, among other IT requests.

Crossrail implemented a self-service solution that allows its users to easily make IT requests and ask for help, automating the delivery of requests for improved service and reduced operational cost.

Having decided to bring IT support in-house, Crossrail selected ServiceNow through the standard procurement process using the Government’s Digital Marketplace (G-Cloud Initiative). ServiceNow demonstrated the best value for money and the quickest return on investment, allowing rapid adoption so that savings could be generated before the transfer of assets to TfL in 2018.

Alistair Goodall, Head of Applications and Portfolio Management for Crossrail Ltd, said, “ServiceNow is unique, with the grounded architecture we were looking for in terms of SaaS. It was our chosen solution for a quick route to market, with competitive prices making it the most cost-effective solution for Crossrail.”